Smooth Touchdown: Novel Camera-Based System for Automated Landing of a Drone on a Fixed Spot

2021/01/21


Researchers demonstrate automated drone landing using 2D camera-based symbol detection, with potential applications in rescue missions.

While autonomous drones can greatly assist with difficult rescue missions, they require a safe landing procedure. In a new study, scientists from Shibaura Institute of Technology (SIT), Japan, have demonstrated automated drone landing in which a simple 2D camera guides the drone to a landing pad marked with a symbol. The system could be further improved by adding depth information to the camera guidance, paving the way for novel applications in indoor transportation and inspection.

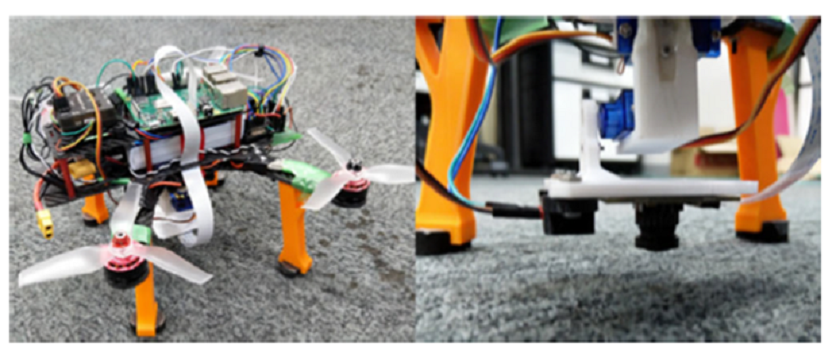

Title: The drone used in the study

Caption: A standard radio control-based drone, upgraded with necessary hardware and software and equipped with a simple 2D camera for the detection of a symbolized landing pad

Credit: Malik Demirhan and Chinthaka Premachandra in “Development of an Automated Camera-Based Drone Landing System,” published in IEEE Access (available on IEEE Xplore), under Creative Commons license CC BY-NC-ND 4.0

“While it is desirable to automate the landing using a depth camera that can gauge terrain unevenness and find suitable landing spots, a framework serving as a useful base needs to be developed first,” observes Dr. Chinthaka Premachandra from Shibaura Institute of Technology (SIT), Japan, whose research group studies potential applications of camera-based quadrocopter drones.

Accordingly, Dr. Premachandra and his team set out to design an automatic landing system; they have detailed their approach in their latest study published in IEEE Access. To keep things simple, they upgraded a standard radio control (RC)-based drone with necessary hardware and software and equipped it with a simple 2D camera for the detection of a symbolized landing pad.

“The challenges in our project were two-fold. On the one hand, we needed a robust and cost-effective image-processing algorithm to provide position feedback to the controller. On the other, we required a fail-safe switch logic that would allow the pilot to abort the autonomous mode whenever required, preventing accidents during tests,” explains Dr. Premachandra.
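
The fail-safe requirement can be illustrated with a small mode-selection function. This is a minimal sketch, not the authors' implementation: in the study the switching is done in hardware by a multiplexer, whereas here it is modeled in software. It assumes the pilot's abort switch arrives as an RC pulse width in microseconds; the threshold, the valid pulse range, the `Command` type, and `select_command` are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical values: many RC receivers emit 1000-2000 microsecond pulses.
# Pulses outside VALID_RANGE_US are treated as a lost or faulty signal.
VALID_RANGE_US = (900, 2100)
AUTO_THRESHOLD_US = 1500


@dataclass(frozen=True)
class Command:
    """Stick commands as PWM pulse widths in microseconds (hypothetical type)."""
    roll: int
    pitch: int
    throttle: int
    yaw: int


def select_command(
    switch_pulse_us: int, pilot: Command, autopilot: Optional[Command]
) -> Tuple[str, Command]:
    """Decide which source drives the flight controller.

    Manual control is the default: the autopilot is used only when the
    pilot's mode switch is valid and set high AND the autopilot has a
    command ready (e.g. the landing symbol is currently detected).
    Flipping the switch low aborts autonomous mode immediately.
    """
    lo, hi = VALID_RANGE_US
    switch_valid = lo <= switch_pulse_us <= hi
    if switch_valid and switch_pulse_us > AUTO_THRESHOLD_US and autopilot is not None:
        return "auto", autopilot
    return "manual", pilot
```

Defaulting to manual whenever there is any doubt (switch low, invalid signal, or no autopilot command) reflects the stated goal: the pilot can always take back control during tests.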

Eventually, the team arrived at a design comprising the following components:

- a commercial flight controller (for attitude control)
- a Raspberry Pi 3B+ (for autonomous position control)
- a modified wide-angle Raspberry Pi v1.3 camera (for horizontal position feedback)
- a servo gimbal (for camera usage control)
- a Time-of-Flight (ToF) module (as the feedback sensor for the drone's height)
- a multiplexer (for switching between manual and autonomous modes)
- an “anti-windup” PID controller (for height control)
- two PD controllers (for horizontal movement control)
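
The height loop relies on an “anti-windup” PID controller. As a hedged illustration, the sketch below uses one common anti-windup technique, conditional integration, in which the integrator is frozen while the output is saturated in the direction of the error. The study's exact anti-windup scheme is not described here, so the class name, gains, and output limits are hypothetical. A PD controller, as used for horizontal movement, is the same update with the integral gain set to zero.

```python
class AntiWindupPID:
    """PID controller with output clamping and conditional integration.

    Conditional integration is one common anti-windup scheme: while the
    output is saturated and the error would push it further into saturation,
    the integrator is frozen instead of growing without bound.
    """

    def __init__(self, kp: float, ki: float, kd: float, out_min: float, out_max: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error

        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        clamped = min(max(output, self.out_min), self.out_max)

        # Freeze the integrator only when saturated in the direction of the error.
        pushing_high = output > self.out_max and error > 0
        pushing_low = output < self.out_min and error < 0
        if not (pushing_high or pushing_low):
            self.integral = candidate
        return clamped


# Height loop (PID) and one horizontal axis (PD = same update with ki = 0).
# Gains and limits are placeholders, not values from the study.
height_pid = AntiWindupPID(kp=0.8, ki=0.3, kd=0.2, out_min=-1.0, out_max=1.0)
x_pd = AntiWindupPID(kp=0.5, ki=0.0, kd=0.1, out_min=-0.5, out_max=0.5)
```

Without the freeze, a long saturated stretch (for example, a large height error at takeoff) would accumulate a large integral that keeps pushing the drone past the target after the error disappears.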

In addition, they implemented an image-processing algorithm that detected a distinctive landing symbol (in the shape of an “H”) in real time and converted its pixel coordinates into physical coordinates, providing horizontal position feedback. Interestingly, they found that introducing an adaptive “region of interest” sped up the computation of the camera’s vertical distance to the landing symbol, greatly reducing the computing time from 12–14 milliseconds to just 3 milliseconds.
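
Two ideas from this paragraph can be sketched in a few lines. Assuming an ideal pinhole camera (ignoring the wide-angle lens distortion a real system would need to correct), a pixel offset from the image center maps to a physical horizontal offset by scaling it with the ToF-measured height divided by the focal length in pixels. An adaptive region of interest restricts the search to a window around the previous detection, so fewer pixels are processed per frame. The function names, the camera intrinsics `fx`, `fy`, `cx`, `cy`, and the `margin` value are hypothetical; the authors' actual conversion and ROI rule may differ.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def pixel_to_metric(u: float, v: float, height_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Map the symbol's pixel position (u, v) to a horizontal offset in meters
    from the camera's optical axis, using an ideal pinhole model and the
    ToF-measured height. Lens distortion is ignored in this sketch."""
    x_m = (u - cx) * height_m / fx
    y_m = (v - cy) * height_m / fy
    return x_m, y_m


def adaptive_roi(last_box: Optional[Box], img_w: int, img_h: int,
                 margin: float = 0.5) -> Box:
    """Choose the search window for the next frame.

    If the symbol was found in the previous frame, search only around it,
    expanded by `margin` times its width/height on each side and clipped to
    the image; otherwise fall back to searching the whole frame."""
    if last_box is None:
        return 0, 0, img_w, img_h
    x0, y0, x1, y1 = last_box
    dx = int((x1 - x0) * margin)
    dy = int((y1 - y0) * margin)
    return max(0, x0 - dx), max(0, y0 - dy), min(img_w, x1 + dx), min(img_h, y1 + dy)
```

For example, in a 640 × 480 frame where the symbol occupies a 40 × 40 pixel box, the expanded window is 80 × 80 pixels, about 2% of the full frame, which illustrates why restricting the search can cut processing time substantially.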

Once the symbol was detected, the system completed the landing in two steps: first, flying toward the landing spot and hovering above it at a constant height; then, descending vertically onto it. Both steps were automated and controlled by the Raspberry Pi.
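
The two-step procedure maps naturally onto a small state machine. The sketch below is illustrative only: the phase names, the alignment and touchdown tolerances, and the constant descent rate are assumptions, not values from the study.

```python
from enum import Enum, auto


class Phase(Enum):
    APPROACH = auto()  # fly toward the pad, then hover above it at constant height
    DESCEND = auto()   # descend vertically onto the pad
    LANDED = auto()


def next_phase(phase: Phase, offset_m: float, height_m: float,
               align_tol_m: float = 0.05, touchdown_m: float = 0.05) -> Phase:
    """Advance the landing state machine.

    offset_m: horizontal distance to the symbol (meters, from the camera)
    height_m: height above the pad (meters, from the ToF sensor)
    """
    if phase is Phase.APPROACH and offset_m <= align_tol_m:
        return Phase.DESCEND
    if phase is Phase.DESCEND and height_m <= touchdown_m:
        return Phase.LANDED
    return phase


def height_setpoint(phase: Phase, hover_m: float, current_sp: float,
                    descent_rate_mps: float, dt: float) -> float:
    """Height target passed to the height controller in each phase."""
    if phase is Phase.APPROACH:
        return hover_m  # hold the hover height while aligning horizontally
    if phase is Phase.DESCEND:
        return max(0.0, current_sp - descent_rate_mps * dt)  # ramp down steadily
    return 0.0
```

Separating alignment from descent keeps the two control problems decoupled: the horizontal controllers settle first, and only then does the height setpoint start to move.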

While examining the landing, the research team noticed a disturbance in the landing behavior, which they attributed to aerodynamic lift acting on the quadrocopter. This problem could be overcome by increasing the gain of the PID controller. Overall, the landing tests indicated a properly functioning autonomous system.

With these results, Dr. Premachandra and his team look forward to upgrading their system with a depth camera, enabling drones to find even more applications in daily life. “Our study was primarily motivated by the application of drones in rescue missions, but it shows that drones could in the future find use in indoor operations such as indoor transportation and inspection, which can reduce a lot of manual labor,” concludes Dr. Premachandra.

Reference

| Field | Details |
|---|---|
| Title of original paper | Development of an Automated Camera-Based Drone Landing System |
| Journal | IEEE Access |
| DOI | 10.1109/ACCESS.2020.3034948 |

Funding Information

This study was funded by the Branding Research Fund of SIT, Japan.

Contact

Planning and Public Relations Section

3-9-14 Shibaura, Minato-ku, Tokyo 108-8548, Japan (2F Shibaura campus)

TEL: +81-(0)3-6722-2900 / FAX: +81-(0)3-6722-2901

E-mail: koho@ow.shibaura-it.ac.jp