Advanced Noise Suppression Technology for Improved Search and Rescue Drones


Researchers have developed a novel AI-based noise suppression system for more effective victim detection by UAVs during natural disasters.

Unmanned Aerial Vehicles (UAVs) are beneficial in search and rescue missions during natural disasters like earthquakes. However, current UAVs depend on visual information and cannot detect victims trapped under rubble. While some studies have used sound for detection, the noise from UAV propellers can drown out human sounds. To address this issue, researchers have developed a novel artificial intelligence-based system that effectively suppresses UAV noise and amplifies human sounds.

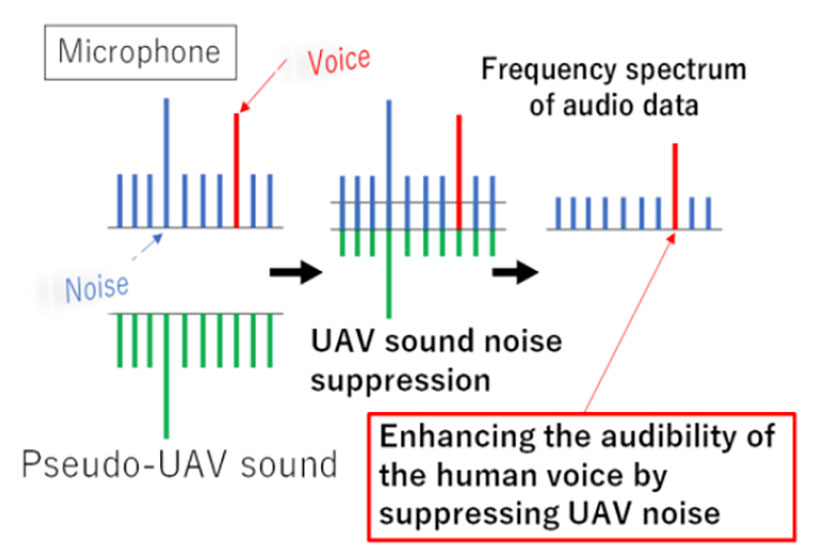

Title: Noise cancelling procedure used in the study

Caption: The AI-based noise cancelling method generates a sound similar to the UAV noise and then subtracts it from the sound captured by the UAV microphone, eliminating the noise so that human sounds can be heard clearly.

Credit: Chinthaka Premachandra from Shibaura Institute of Technology

License type: CC BY-NC-ND 4.0

Usage restrictions: You are free to share the material. Attribution is required. You may not use the material for commercial purposes. You cannot distribute any derivative works or adaptations of the original work.

Unmanned Aerial Vehicles (UAVs) have received significant attention in recent years across many sectors, such as the military, agriculture, construction, and disaster management. These versatile machines offer remote access to hard-to-reach or hazardous areas and excellent surveillance capabilities. In particular, they can be immensely useful for searching for victims in collapsed houses and rubble in the aftermath of natural disasters like earthquakes, enabling early detection of victims and a rapid response.

Existing research in this area has mostly focused on UAVs equipped with cameras that rely on images to search for victims and assess the situation. However, visual information alone can be insufficient, especially when victims are trapped under rubble or are in the cameras' blind spots. Recognizing this limitation, some studies have used sound to detect trapped individuals. This poses a significant challenge: a UAV flies on fast-rotating propellers mounted on the drone itself, so their noise can drown out fainter human sounds coming from farther away. It is therefore necessary to suppress the propeller noise and isolate the sounds of trapped victims for effective detection.
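Why distance matters can be illustrated with the free-field inverse-square law, under which sound pressure level falls by 20 log10 of the distance ratio (about 6 dB per doubling of distance). The levels below are hypothetical round numbers chosen for illustration, not measurements from the study, and real rubble adds further attenuation that this idealization ignores:

$$
L(r) = L(r_0) - 20\log_{10}\frac{r}{r_0}
$$

$$
L_{\text{voice}}(10\,\text{m}) = 60\,\text{dB} - 20\log_{10}\frac{10\,\text{m}}{1\,\text{m}} = 60\,\text{dB} - 20\,\text{dB} = 40\,\text{dB}
$$

If the propeller noise at the onboard microphone were, say, 80 dB, a voice reaching the drone at 40 dB would be 40 dB below it, that is, carrying roughly one ten-thousandth of the noise's acoustic power.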

Some studies have attempted to solve this problem by using multiple microphones to separate the victims' sounds from the propeller noise, combined with speech recognition. However, the processed sound can make it difficult for the operator to accurately recognize the victims' sounds. Moreover, such software relies on predetermined words to isolate human sounds, whereas the sounds made by victims may vary with the situation.

To address these issues, Professor Chinthaka Premachandra and Mr. Yugo Kinasada from the Department of Electronic Engineering at the School of Engineering in Shibaura Institute of Technology, Japan, developed a novel artificial intelligence (AI)-based noise suppression system. Professor Premachandra explains, “Suppressing the UAV propeller noise from the sound mixture while enhancing the audibility of human voices presents a formidable research problem. The variable intensity of UAV noise, fluctuating unpredictably with different flight movements, complicates the development of a signal-processing filter capable of effectively removing UAV sound from the mixture. Our system utilizes AI to effectively recognize propeller sound and address these issues.” The details of their system were outlined in a study made available online on December 1, 2023, and published in Volume 17, Issue 1 of the journal IEEE Transactions on Services Computing in January 2024.

At the heart of this system is a generative adversarial network (GAN), a type of AI model in which a generator network learns to produce realistic data by competing against a discriminator network that tries to tell generated data from real data. The researchers trained the GAN on various types of UAV propeller sound. The trained model then generates a sound similar to that of the UAV propellers, called pseudo-UAV sound, which is subtracted from the actual sound captured by the UAV's onboard microphones. This allows the operator to clearly hear, and therefore recognize, human sounds. The technique has several advantages over traditional noise suppression systems, including the ability to suppress UAV noise accurately within a narrow frequency range. Importantly, it can adapt to the fluctuating noise of the UAV in real time. These benefits can significantly enhance the utility of UAVs in search and rescue missions.
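The article says only that the pseudo-UAV sound is subtracted from the microphone signal; it does not say in which domain the subtraction happens. A common way to do this kind of step is magnitude spectral subtraction over short overlapping frames, sketched below. It assumes a noise reference `pseudo_noise` that is aligned sample-for-sample with the recording, standing in for the GAN's output (the GAN itself is not modeled here). The function name `spectral_subtract`, the frame length, hop size, and spectral floor are hypothetical illustrative choices, not values from the study.

```python
import numpy as np


def spectral_subtract(mixture, pseudo_noise, frame_len=512, hop=256, floor=0.05):
    """Suppress noise in `mixture` by subtracting the magnitude spectrum of a
    time-aligned noise reference `pseudo_noise` (hypothetically, a generated
    pseudo-UAV signal) frame by frame, then resynthesizing with overlap-add."""
    mixture = np.asarray(mixture, dtype=float)
    pseudo_noise = np.asarray(pseudo_noise, dtype=float)
    if mixture.shape != pseudo_noise.shape:
        raise ValueError("mixture and pseudo_noise must have the same length")
    if frame_len != 2 * hop:
        raise ValueError("this sketch assumes 50% overlap (frame_len == 2 * hop)")

    # Square-root periodic Hann window, applied at analysis and synthesis.
    # Its square (a periodic Hann) sums to exactly 1 over 50%-overlapping frames.
    n = np.arange(frame_len)
    window = np.sqrt(0.5 - 0.5 * np.cos(2 * np.pi * n / frame_len))

    out = np.zeros_like(mixture)
    for start in range(0, len(mixture) - frame_len + 1, hop):
        frame = slice(start, start + frame_len)
        spec = np.fft.rfft(mixture[frame] * window)
        noise_mag = np.abs(np.fft.rfft(pseudo_noise[frame] * window))
        mag = np.abs(spec)
        # Subtract the noise magnitude; keep a small fraction of the original
        # magnitude as a floor so no bin goes negative or fully silent.
        clean_mag = np.maximum(mag - noise_mag, floor * mag)
        # Reuse the mixture's phase and return to the time domain.
        clean = np.fft.irfft(clean_mag * np.exp(1j * np.angle(spec)), n=frame_len)
        out[frame] += clean * window
    # The first and last half-frame are only partially reconstructed (fade in/out).
    return out
```

As a toy check, mixing a 300 Hz tone (standing in for a voice) with 2 kHz and 4 kHz tones (standing in for propeller harmonics) and passing those exact tones as `pseudo_noise` reduces the error against the clean tone to a small fraction of the unprocessed error. A GAN would only approximate the real propeller noise, so results on real recordings would be less clean, which is consistent with the remaining noise the researchers report.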

The researchers tested the system on a real UAV with a mixture of UAV and human sounds. The tests showed that the system could effectively suppress UAV noise and make human sounds more audible, although some noise remained in the resulting audio. Even so, the researchers consider the current performance sufficient to propose the system for human detection at actual disaster sites, and they are working to improve it further and resolve the remaining issues.
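The article does not say how the remaining noise was quantified. When a clean recording of the human sound is available, as in a controlled test mixture, one common measure is the signal-to-noise ratio (SNR) of the output against that clean reference, and its improvement over the unprocessed mixture. The sketch below is a minimal illustration under that assumption; `snr_db` and `snr_improvement_db` are hypothetical helper names, and the toy signals are invented, not data from the study.

```python
import math


def snr_db(reference, estimate):
    """SNR (dB) of `estimate` against the clean `reference`, treating
    everything that differs from the reference as noise."""
    if len(reference) != len(estimate):
        raise ValueError("reference and estimate must have the same length")
    signal_power = sum(x * x for x in reference)
    noise_power = sum((e - x) ** 2 for x, e in zip(reference, estimate))
    if noise_power == 0:
        return math.inf
    return 10 * math.log10(signal_power / noise_power)


def snr_improvement_db(reference, mixture, processed):
    """How many dB the processing raised the SNR relative to the raw mixture."""
    return snr_db(reference, processed) - snr_db(reference, mixture)


if __name__ == "__main__":
    # Hypothetical toy signals, not data from the study.
    voice = [1.0, -1.0, 1.0, -1.0]
    mixture = [v + 1.0 for v in voice]    # noise power equals signal power: 0 dB
    processed = [v + 0.1 for v in voice]  # 1% of the noise power remains
    print(round(snr_improvement_db(voice, mixture, processed), 6))  # prints 20.0
```

Because the residual is measured against a known clean signal, this kind of metric only applies to controlled tests; at a real disaster site the victim's clean voice is not available, and the operator's listening judgment remains the practical check.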

Overall, this research holds great potential for the use of UAVs in disaster management. “This approach not only promises to improve post-disaster human detection strategies but also enhances our ability to amplify necessary sound components when mixed with unnecessary ones,” said Professor Premachandra, emphasizing the importance of the study. “Our ongoing efforts will help in further enhancing the effectiveness of UAVs in disaster response and contribute to saving more lives.”

Reference

Title of original paper: GAN Based on Audio Noise Suppression for Victim Detection at Disaster Sites with UAV

Journal: IEEE Transactions on Services Computing

DOI: 10.1109/TSC.2023.3338488

Authors

About Professor Chinthaka Premachandra from SIT, Japan

Chinthaka Premachandra is currently a Professor in the Department of Electrical Engineering at the Graduate School of Science and Engineering, Shibaura Institute of Technology (SIT), Japan. He received the B.Sc. and M.Sc. degrees from Mie University, Tsu, Japan, in 2006 and 2008, respectively, and the Ph.D. degree from Nagoya University, Nagoya, Japan, in 2011. Before joining SIT in 2016, he served as an Assistant Professor in the Department of Electrical Engineering, Faculty of Engineering, Tokyo University of Science. At SIT, he is currently the Manager of the Image Processing and Robotic Laboratory. In 2022, he received the IEEE Sensors Letters Best Paper Award from the IEEE Sensors Council and the IEEE Japan Medal from the IEEE Tokyo Section. His research interests include AI, UAVs, image processing, audio processing, intelligent transport systems (ITS), and mobile robotics.

Funding Information

This work was supported in part by the Japan Society for the Promotion of Science Grant-in-Aid for Scientific Research (C) (Grant No. 21K04592).